LDD3 Study Notes

LDD3 WEEK4

Supplementary Code

Environment

Linux rogerthat 5.19.0-46-generic #47-Ubuntu SMP PREEMPT_DYNAMIC Fri Jun 16 13:30:11 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

Chapter 5

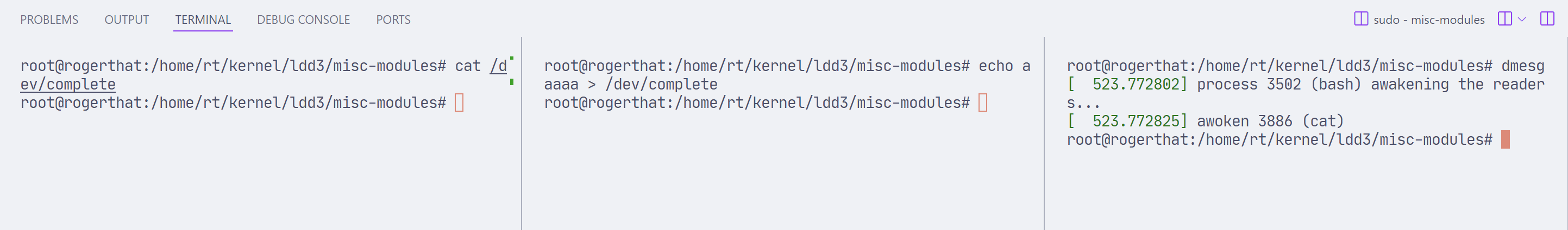

complete

A lightweight synchronization mechanism.

#include <linux/module.h>

#include <linux/init.h>

#include <linux/sched.h> /* current and everything */

#include <linux/kernel.h> /* printk() */

#include <linux/fs.h> /* everything... */

#include <linux/types.h> /* size_t */

#include <linux/completion.h>

MODULE_LICENSE("Dual BSD/GPL");

static int complete_major = 0;

DECLARE_COMPLETION(comp);

ssize_t complete_read (struct file *filp, char __user *buf, size_t count, loff_t *pos)

{

printk(KERN_DEBUG "process %i (%s) going to sleep\n",

current->pid, current->comm);

wait_for_completion(&comp);

printk(KERN_DEBUG "awoken %i (%s)\n", current->pid, current->comm);

return 0; /* EOF */

}

ssize_t complete_write (struct file *filp, const char __user *buf, size_t count,

loff_t *pos)

{

printk(KERN_DEBUG "process %i (%s) awakening the readers...\n",

current->pid, current->comm);

complete(&comp);

return count; /* succeed, to avoid retrial */

}

struct file_operations complete_fops = {

.owner = THIS_MODULE,

.read = complete_read,

.write = complete_write,

};

int complete_init(void)

{

int result;

/*

* Register your major, and accept a dynamic number

*/

result = register_chrdev(complete_major, "complete", &complete_fops);

if (result < 0)

return result;

if (complete_major == 0)

complete_major = result; /* dynamic */

return 0;

}

void complete_cleanup(void)

{

unregister_chrdev(complete_major, "complete");

}

module_init(complete_init);

module_exit(complete_cleanup);
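The behaviour of complete() and wait_for_completion() in the module above rests on a counter inside struct completion: each complete() increments it and each successful wait consumes one unit, so a write issued before any reader sleeps is not lost, and each write releases at most one reader. The following is a minimal user-space model of that counter only, not the kernel implementation; the names `model_completion`, `model_complete` and `model_try_wait` are hypothetical, and nothing here actually blocks or sleeps.

```c
#include <stdbool.h>

/* Hypothetical user-space model of the "done" counter in a completion. */
struct model_completion {
    unsigned int done; /* completions signalled but not yet consumed */
};

/* Models complete(): record one completion event. */
static void model_complete(struct model_completion *c)
{
    c->done++;
}

/*
 * Models a non-blocking wait: consume one event if one is available.
 * Returns true where the real wait_for_completion() would return at once,
 * false where it would put the caller to sleep.
 */
static bool model_try_wait(struct model_completion *c)
{
    if (c->done == 0)
        return false;
    c->done--;
    return true;
}
```

In terms of the module, two writes followed by three reads would let the first two readers through and leave the third asleep until the next write.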

Chapter 6

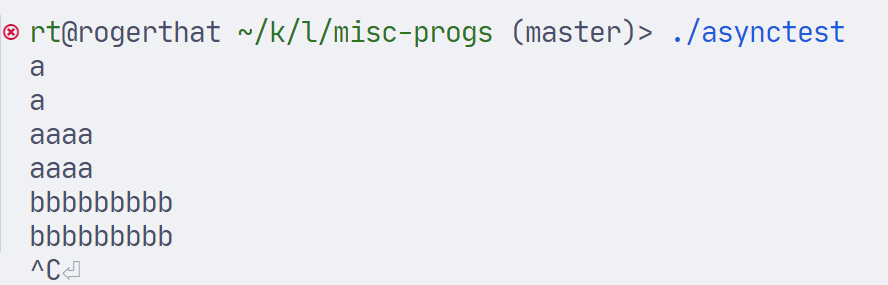

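Asynchronous Notification

Implemented through SIGIO. The process must enable it explicitly: it claims ownership of the file descriptor with F_SETOWN and sets the FASYNC flag with F_SETFL.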

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <unistd.h>

#include <signal.h>

#include <fcntl.h>

volatile sig_atomic_t gotdata = 0; /* modified inside the signal handler */

void sighandler(int signo)

{

if (signo==SIGIO)

gotdata++;

return;

}

char buffer[4096];

int main(int argc, char **argv)

{

int count;

struct sigaction action;

memset(&action, 0, sizeof(action));

action.sa_handler = sighandler;

action.sa_flags = 0;

sigaction(SIGIO, &action, NULL);

fcntl(STDIN_FILENO, F_SETOWN, getpid());

fcntl(STDIN_FILENO, F_SETFL, fcntl(STDIN_FILENO, F_GETFL) | FASYNC);

while(1) {

/* this only returns if a signal arrives */

sleep(86400); /* one day */

if (!gotdata)

continue;

count=read(0, buffer, 4096);

/* buggy: if avail data is more than 4kbytes... */

count=write(1,buffer,count);

gotdata=0;

}

}

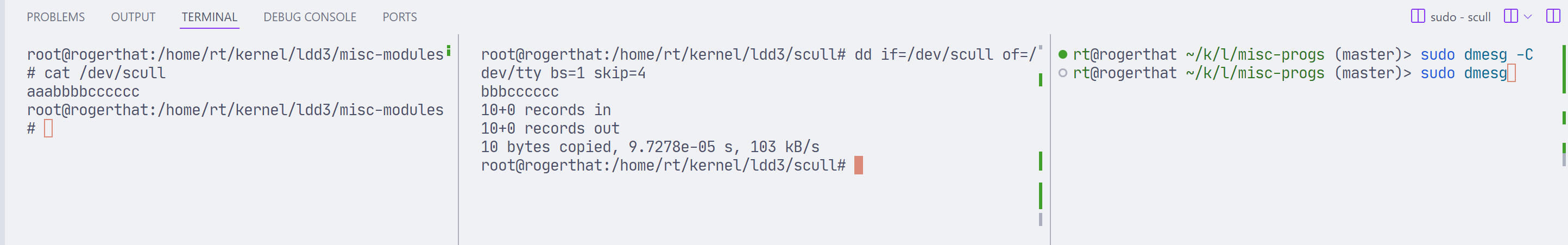

llseek

The scull code implements llseek. Devices that provide a data stream rather than a seekable data area should not support llseek.

loff_t scull_llseek(struct file *filp, loff_t off, int whence)

{

struct scull_dev *dev = filp->private_data;

loff_t newpos;

switch(whence) {

case 0: /* SEEK_SET */

printk(KERN_WARNING "SEEK_SET\n");

newpos = off;

break;

case 1: /* SEEK_CUR */

printk(KERN_WARNING "SEEK_CUR\n");

newpos = filp->f_pos + off;

break;

case 2: /* SEEK_END */

printk(KERN_WARNING "SEEK_END\n");

newpos = dev->size + off;

break;

default: /* can't happen */

return -EINVAL;

}

if (newpos < 0) return -EINVAL;

filp->f_pos = newpos;

return newpos;

}

A simple test:

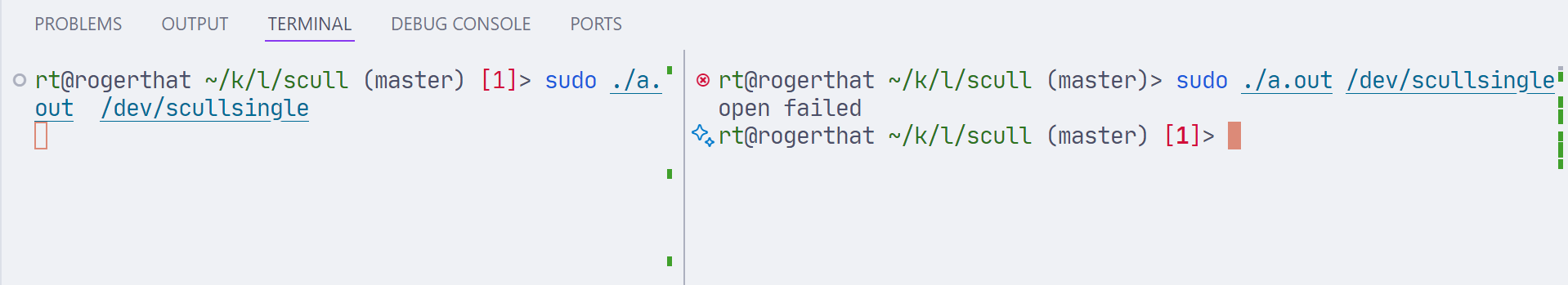
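A test like the one shown above can be reproduced with a few lseek() calls from user space. The sketch below is an assumption about what such a test might look like, not the program used for the screenshot: the helpers `seek_positions` and `print_positions` are hypothetical, and they work on any seekable file descriptor, including an opened scull device node.

```c
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper: exercise the three whence values on fd and store
 * the resulting offsets in out[0..2]. Returns 0 on success, -1 if any
 * lseek() call fails (for example on a device that is not seekable).
 */
static int seek_positions(int fd, off_t out[3])
{
    out[0] = lseek(fd, 4, SEEK_SET);  /* absolute offset 4 */
    if (out[0] == (off_t)-1)
        return -1;
    out[1] = lseek(fd, 2, SEEK_CUR);  /* previous position plus 2 */
    if (out[1] == (off_t)-1)
        return -1;
    out[2] = lseek(fd, -1, SEEK_END); /* one byte before the end */
    if (out[2] == (off_t)-1)
        return -1;
    return 0;
}

/* Print the three offsets gathered by seek_positions(). */
static void print_positions(const off_t pos[3])
{
    printf("SEEK_SET=%lld SEEK_CUR=%lld SEEK_END=%lld\n",
           (long long)pos[0], (long long)pos[1], (long long)pos[2]);
}
```

Each call should trigger the matching printk in scull_llseek, so the three messages appear in dmesg in the order SEEK_SET, SEEK_CUR, SEEK_END.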

File Access Control

A simple program that opens <device> and keeps it open for a while:

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#include <fcntl.h>

int main(int argc, char *argv[])

{

int fd;

if (argc != 2) {

printf("Usage: %s <device>\n", argv[0]);

exit(1);

}

fd = open(argv[1], O_RDWR);

if (fd < 0) {

printf("open failed\n");

exit(1);

}

sleep(100);

printf("close file\n");

close(fd);

return 0;

}

scullsingle

static struct scull_dev scull_s_device;

static atomic_t scull_s_available = ATOMIC_INIT(1);

static int scull_s_open(struct inode *inode, struct file *filp)

{

struct scull_dev *dev = &scull_s_device; /* device information */

if (! atomic_dec_and_test (&scull_s_available)) {

atomic_inc(&scull_s_available);

return -EBUSY; /* already open */

}

/* then, everything else is copied from the bare scull device */

if ( (filp->f_flags & O_ACCMODE) == O_WRONLY)

scull_trim(dev);

filp->private_data = dev;

return 0; /* success */

}

static int scull_s_release(struct inode *inode, struct file *filp)

{

atomic_inc(&scull_s_available); /* release the device */

return 0;

}

As the output shows, the second open fails.

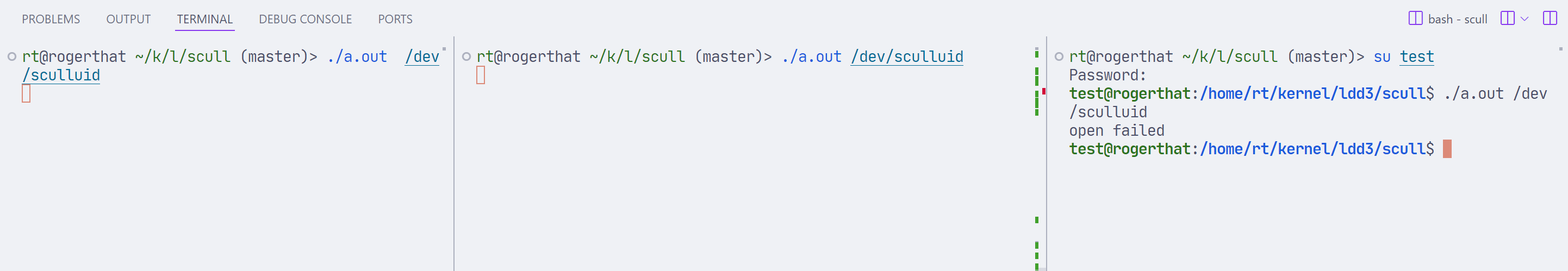
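The single-open policy hinges on atomic_dec_and_test(), which decrements the counter and reports whether it reached zero. A rough user-space model of the same logic, using C11 atomics in place of the kernel's atomic_t, could look like this; `model_open` and `model_release` are hypothetical names, and the sketch leaves out everything scull-specific.

```c
#include <errno.h>
#include <stdatomic.h>

static atomic_int available = 1; /* 1 = free, 0 or less = already open */

/* Models scull_s_open(): succeed only for the first opener. */
static int model_open(void)
{
    /* atomic_fetch_sub returns the old value; old == 1 means we reached 0 */
    if (atomic_fetch_sub(&available, 1) != 1) {
        atomic_fetch_add(&available, 1); /* undo our own decrement */
        return -EBUSY;
    }
    return 0;
}

/* Models scull_s_release(): make the device available again. */
static void model_release(void)
{
    atomic_fetch_add(&available, 1);
}
```

Because the decrement and the test happen as one atomic step, two processes racing to open the device cannot both see the counter reach zero.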

sculluid

static struct scull_dev scull_u_device;

static int scull_u_count; /* initialized to 0 by default */

static uid_t scull_u_owner; /* initialized to 0 by default */

static DEFINE_SPINLOCK(scull_u_lock);

static int scull_u_open(struct inode *inode, struct file *filp)

{

struct scull_dev *dev = &scull_u_device; /* device information */

spin_lock(&scull_u_lock);

if (scull_u_count &&

(scull_u_owner != current_uid().val) && /* allow user */

(scull_u_owner != current_euid().val) && /* allow whoever did su */

!capable(CAP_DAC_OVERRIDE)) { /* still allow root */

spin_unlock(&scull_u_lock);

return -EBUSY; /* -EPERM would confuse the user */

}

if (scull_u_count == 0)

scull_u_owner = current_uid().val; /* grab it */

scull_u_count++;

spin_unlock(&scull_u_lock);

/* then, everything else is copied from the bare scull device */

if ((filp->f_flags & O_ACCMODE) == O_WRONLY)

scull_trim(dev);

filp->private_data = dev;

return 0; /* success */

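}

The logic is fairly clear: the first opener becomes the owner, and later opens succeed only for the same uid or euid, or for a process with CAP_DAC_OVERRIDE.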
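The access decision in scull_u_open() can be isolated as a pure function, which makes the policy easy to check case by case. This is only an illustrative restatement of the condition in the listing above; `uid_open_allowed` and its parameters are hypothetical names, and the locking is deliberately left out.

```c
#include <stdbool.h>

/*
 * Hypothetical pure-function model of the check in scull_u_open():
 * returns true when the open would be allowed.
 */
static bool uid_open_allowed(int open_count, unsigned int owner,
                             unsigned int uid, unsigned int euid,
                             bool has_dac_override)
{
    if (open_count == 0)
        return true;         /* device is free: the caller becomes owner */
    if (owner == uid || owner == euid)
        return true;         /* same user, or whoever did su to the owner */
    return has_dac_override; /* privileged processes are still allowed */
}
```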

scullpriv

/************************************************************************

*

* Finally the `cloned' private device. This is trickier because it

* involves list management, and dynamic allocation.

*/

/* The clone-specific data structure includes a key field */

struct scull_listitem {

struct scull_dev device;

dev_t key;

struct list_head list;

};

/* The list of devices, and a lock to protect it */

static LIST_HEAD(scull_c_list);

static DEFINE_SPINLOCK(scull_c_lock);

/* A placeholder scull_dev which really just holds the cdev stuff. */

static struct scull_dev scull_c_device;

/* Look for a device or create one if missing */

static struct scull_dev *scull_c_lookfor_device(dev_t key)

{

struct scull_listitem *lptr;

list_for_each_entry(lptr, &scull_c_list, list) {

if (lptr->key == key)

return &(lptr->device);

}

/* not found */

lptr = kmalloc(sizeof(struct scull_listitem), GFP_KERNEL);

if (!lptr)

return NULL;

/* initialize the device */

memset(lptr, 0, sizeof(struct scull_listitem));

lptr->key = key;

scull_trim(&(lptr->device)); /* initialize it */

mutex_init(&lptr->device.lock);

/* place it in the list */

list_add(&lptr->list, &scull_c_list);

return &(lptr->device);

}

static int scull_c_open(struct inode *inode, struct file *filp)

{

struct scull_dev *dev;

dev_t key;

if (!current->signal->tty) {

PDEBUG("Process \"%s\" has no ctl tty\n", current->comm);

return -EINVAL;

}

key = tty_devnum(current->signal->tty);

/* look for a scullc device in the list */

spin_lock(&scull_c_lock);

dev = scull_c_lookfor_device(key);

spin_unlock(&scull_c_lock);

if (!dev)

return -ENOMEM;

/* then, everything else is copied from the bare scull device */

if ( (filp->f_flags & O_ACCMODE) == O_WRONLY)

scull_trim(dev);

filp->private_data = dev;

return 0; /* success */

}

static int scull_c_release(struct inode *inode, struct file *filp)

{

/*

* Nothing to do, because the device is persistent.

* A `real' cloned device should be freed on last close

*/

return 0;

}

The device number of each controlling tty serves as the key. In practice, this distinguishes the individual pts terminals:

rt@rogerthat ~/k/l/scull (master)> sudo dmesg

[12841.367120] tty key: major: 136, minor: 10

[12842.179333] tty key: major: 136, minor: 11

Chapter 7

Time API

#include <linux/module.h>

#include <linux/moduleparam.h>

#include <linux/init.h>

#include <linux/kernel.h> /* printk() */

#include <linux/slab.h> /* kmalloc() */

#include <linux/fs.h> /* everything... */

#include <linux/errno.h> /* error codes */

#include <linux/types.h> /* size_t */

#include <linux/proc_fs.h>

#include <linux/fcntl.h> /* O_ACCMODE */

#include <linux/uaccess.h> /* copy_*_user */

#include <linux/jiffies.h>

#include <linux/time.h>

MODULE_AUTHOR("RT");

MODULE_LICENSE("Dual BSD/GPL");

unsigned long t0, t1; /* zero-initialized */

module_param(t0, ulong, S_IRUGO);

module_param(t1, ulong, S_IRUGO);

int do_time_api(void);

static int timer_init_module(void)

{

t0 = jiffies;

printk(KERN_INFO "jiffies t0: %lu\n", t0);

printk(KERN_INFO "Timer module loaded\n");

do_time_api();

return 0;

}

static void timer_cleanup_module(void)

{

t1 = jiffies;

printk(KERN_INFO "jiffies t1: %lu\n", t1);

printk(KERN_INFO "diff msec: %lu\n", (t1-t0)*1000/HZ);

if (time_after(t1, t0)) {

printk(KERN_INFO "t1 after t0\n");

}

printk(KERN_INFO "Timer module unloaded\n");

}

int do_time_api(void)

{

struct timespec64 tv1;

struct timespec64 tv2;

unsigned long j1;

u64 j2;

/* get them four */

j1 = jiffies;

j2 = get_jiffies_64();

ktime_get_real_ts64(&tv1);

ktime_get_coarse_real_ts64(&tv2);

printk(KERN_INFO "0x%08lx 0x%016Lx\n"

"%10i.%09i\n"

"%40i.%09i\n",

j1, j2,

(int) tv1.tv_sec, (int) tv1.tv_nsec,

(int) tv2.tv_sec, (int) tv2.tv_nsec);

return 0;

}

module_init(timer_init_module);

module_exit(timer_cleanup_module);

result

[27344.420102] jiffies t0: 4301728356

[27344.420105] Timer module loaded

[27344.420114] 0x100672a64 0x0000000100672a64

1715866818.547302295

1715866818.545032262

[27347.184635] jiffies t1: 4301729047

[27347.184644] diff msec: 2764

[27347.184645] t1 after t0

[27347.184645] Timer module unloaded

timer

void jit_timer_fn(struct timer_list *t)

{

struct jit_data *data = from_timer(data, t, timer);

unsigned long j = jiffies;

seq_printf(data->m, "%9li %3li %i %6i %i %s\n",

j, j - data->prevjiffies, in_interrupt() ? 1 : 0,

current->pid, smp_processor_id(), current->comm);

if (--data->loops) {

data->timer.expires += tdelay;

data->prevjiffies = j;

add_timer(&data->timer);

} else {

wake_up_interruptible(&data->wait);

}

}

/* the /proc function: allocate everything to allow concurrency */

int jit_timer_show(struct seq_file *m, void *v)

{

struct jit_data *data;

unsigned long j = jiffies;

data = kmalloc(sizeof(*data), GFP_KERNEL);

if (!data)

return -ENOMEM;

init_waitqueue_head(&data->wait);

/* write the first lines in the buffer */

seq_puts(m, " time delta inirq pid cpu command\n");

seq_printf(m, "%9li %3li %i %6i %i %s\n",

j, 0L, in_interrupt() ? 1 : 0,

current->pid, smp_processor_id(), current->comm);

/* fill the data for our timer function */

data->prevjiffies = j;

data->m = m;

data->loops = JIT_ASYNC_LOOPS;

/* register the timer */

timer_setup(&data->timer, jit_timer_fn, 0);

data->timer.expires = j + tdelay; /* parameter */

add_timer(&data->timer);

/* wait for the buffer to fill */

wait_event_interruptible(data->wait, !data->loops);

if (signal_pending(current))

return -ERESTARTSYS;

kfree(data);

return 0;

}

result

rt@rogerthat ~/k/l/misc-modules (master)> cat /proc/jitimer

time delta inirq pid cpu command

4302300677 0 0 47728 7 cat

4302300689 12 1 0 7 swapper/7

4302300698 9 1 0 7 swapper/7

4302300708 10 1 0 7 swapper/7

4302300718 10 1 0 7 swapper/7

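4302300728 10 1 0 7 swapper/7

The timer function runs roughly once every 10 jiffies, in interrupt context on top of the idle task swapper/7, five times in total.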
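The delta column is measured in jiffies, so turning it into wall-clock time requires HZ. The Time API output earlier reports 691 jiffies (4301729047 - 4301728356) as 2764 ms, which implies HZ = 250 on this machine, so a 10-jiffy period is about 40 ms. The sketch below reproduces that arithmetic in user space, together with the wraparound-safe comparison behind time_after(). `MODEL_HZ`, `jiffies_delta_to_msecs` and `model_time_after` are hypothetical names, and HZ = 250 is inferred from the output rather than read from the kernel configuration.

```c
#include <limits.h>
#include <stdbool.h>

#define MODEL_HZ 250UL /* assumption: inferred from 691 jiffies == 2764 ms */

/* Same arithmetic as the module's (t1 - t0) * 1000 / HZ. */
static unsigned long jiffies_delta_to_msecs(unsigned long t0, unsigned long t1)
{
    /* unsigned subtraction gives the right delta even if the counter wrapped */
    return (t1 - t0) * 1000UL / MODEL_HZ;
}

/* Models time_after(a, b): true if a is later than b, even across a wrap. */
static bool model_time_after(unsigned long a, unsigned long b)
{
    return (long)(b - a) < 0;
}
```

Comparing the raw values with `a > b` would give the wrong answer right after the counter wraps, which is why the kernel macro compares the signed difference instead.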

tasklet

void jit_tasklet_fn(unsigned long arg)

{

struct jit_data *data = (struct jit_data *)arg;

unsigned long j = jiffies;

seq_printf(data->m, "%9li %3li %i %6i %i %s\n",

j, j - data->prevjiffies, in_interrupt() ? 1 : 0,

current->pid, smp_processor_id(), current->comm);

if (--data->loops) {

data->prevjiffies = j;

if (data->hi)

tasklet_hi_schedule(&data->tlet);

else

tasklet_schedule(&data->tlet);

} else {

wake_up_interruptible(&data->wait);

}

}

/* the /proc function: allocate everything to allow concurrency */

int jit_tasklet_show(struct seq_file *m, void *v)

{

struct jit_data *data;

unsigned long j = jiffies;

long hi = (long)m->private;

data = kmalloc(sizeof(*data), GFP_KERNEL);

if (!data)

return -ENOMEM;

init_waitqueue_head(&data->wait);

/* write the first lines in the buffer */

seq_puts(m, " time delta inirq pid cpu command\n");

seq_printf(m, "%9li %3li %i %6i %i %s\n",

j, 0L, in_interrupt() ? 1 : 0,

current->pid, smp_processor_id(), current->comm);

/* fill the data for our tasklet function */

data->prevjiffies = j;

data->m = m;

data->loops = JIT_ASYNC_LOOPS;

/* register the tasklet */

tasklet_init(&data->tlet, jit_tasklet_fn, (unsigned long)data);

data->hi = hi;

if (hi)

tasklet_hi_schedule(&data->tlet);

else

tasklet_schedule(&data->tlet);

/* wait for the buffer to fill */

wait_event_interruptible(data->wait, !data->loops);

if (signal_pending(current))

return -ERESTARTSYS;

kfree(data);

return 0;

}

result

rt@rogerthat ~/k/l/misc-modules (master)> cat /proc/jitasklet

time delta inirq pid cpu command

4302528075 0 0 49279 5 cat

4302528075 0 1 47 5 ksoftirqd/5

4302528075 0 1 47 5 ksoftirqd/5

4302528075 0 1 47 5 ksoftirqd/5

4302528075 0 1 47 5 ksoftirqd/5

4302528075 0 1 47 5 ksoftirqd/5

Chapter 8

The module is named scullo, borrowing the naming style of GPT-4o.

scullo: change, change, change?!

Conclusion first: I hit a pitfall.

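The Linux kernel has a usercopy whitelist mechanism (part of hardened usercopy): only whitelisted regions may be used for usercopy. If memory allocated from a kmem_cache created with kmem_cache_create is passed to copy_to_user or copy_from_user, the kernel reports a usercopy error. The fix is to create the cache with kmem_cache_create_usercopy, which adds the allocated region to the usercopy whitelist.

Among the parameters of kmem_cache_create_usercopy, the offset is the offset of the whitelisted region from the start of each allocated object. If the whole object should be whitelisted, set the offset to 0 and the size to the object size.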
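To make the offset and size parameters concrete, here is a simplified user-space model of the bounds check applied to a slab object: a copy of n bytes starting at byte `offset` inside the object is allowed only if it lies entirely inside the whitelisted window that starts at useroffset and is usersize bytes long. This is a sketch of the idea, not the kernel's code; `struct model_cache` and `usercopy_allowed` are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of the whitelist fields of a slab cache. */
struct model_cache {
    size_t object_size; /* size of each object in the cache */
    size_t useroffset;  /* start of the whitelisted region */
    size_t usersize;    /* length of the whitelisted region; 0 = none */
};

/* True if copying n bytes at 'offset' within an object stays whitelisted. */
static bool usercopy_allowed(const struct model_cache *c, size_t offset, size_t n)
{
    if (offset < c->useroffset)
        return false; /* starts before the window */
    if (offset - c->useroffset > c->usersize)
        return false; /* starts past the end of the window */
    return n <= c->usersize - (offset - c->useroffset);
}
```

In this model a cache with usersize 0 rejects every copy of one byte or more, which matches the failure described above, while a cache whose window covers the whole object accepts any in-bounds copy.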

Core allocation code

if (scullo_type == scullv)

dptr->data[s_pos] = (void *)vmalloc(PAGE_SIZE << dptr->order);

else if (scullo_type == scullp)

dptr->data[s_pos] = (void *)__get_free_pages(GFP_KERNEL, dptr->order);

else if (scullo_type == scullc)

dptr->data[s_pos] = (void *)kmem_cache_alloc(scullo_cache, GFP_KERNEL);

Core deallocation code

if (dptr->data[i]) {

if (scullo_type == scullv)

vfree(dptr->data[i]);

else if (scullo_type == scullp)

free_pages((unsigned long)dptr->data[i], dptr->order);

else if (scullo_type == scullc)

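kmem_cache_free(scullo_cache, dptr->data[i]);

The full code follows. It mostly came down to diffing the three variants and merging the changes; they differ little, except that some interfaces used by scullc are no longer supported, such as .nopage.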

main.c

/* -*- C -*-

* main.c -- the bare scullo char module

*

* Copyright (C) 2001 Alessandro Rubini and Jonathan Corbet

* Copyright (C) 2001 O'Reilly & Associates

*

* The source code in this file can be freely used, adapted,

* and redistributed in source or binary form, so long as an

* acknowledgment appears in derived source files. The citation

* should list that the code comes from the book "Linux Device

* Drivers" by Alessandro Rubini and Jonathan Corbet, published

* by O'Reilly & Associates. No warranty is attached;

* we cannot take responsibility for errors or fitness for use.

*

* $Id: _main.c.in,v 1.21 2004/10/14 20:11:39 corbet Exp $

*/

#include <linux/module.h>

#include <linux/moduleparam.h>

#include <linux/init.h>

#include <linux/kernel.h> /* printk() */

#include <linux/slab.h> /* kmalloc() */

#include <linux/fs.h> /* everything... */

#include <linux/errno.h> /* error codes */

#include <linux/types.h> /* size_t */

#include <linux/proc_fs.h>

#include <linux/seq_file.h>

#include <linux/fcntl.h> /* O_ACCMODE */

#include <linux/aio.h>

#include <linux/uaccess.h>

#include <linux/uio.h> /* iov_iter* */

#include <linux/version.h>

#include <linux/mutex.h>

#include <linux/vmalloc.h>

#include "scull-shared/scull-async.h"

#include "scullo.h" /* local definitions */

#include "access_ok_version.h"

#include "proc_ops_version.h"

int scullo_major = SCULLO_MAJOR;

int scullo_devs = SCULLO_DEVS; /* number of bare scullo devices */

int scullo_qset = SCULLO_QSET;

int scullo_quantum = SCULLO_QUANTUM;

int scullo_order = 4;

int scullp_order = 1;

int scullv_order = 4;

int scullo_type = scullv;

module_param(scullo_type, int, 0);

module_param(scullo_major, int, 0);

module_param(scullo_devs, int, 0);

module_param(scullo_qset, int, 0);

module_param(scullo_order, int, 0);

module_param(scullo_quantum, int, 0);

MODULE_AUTHOR("Alessandro Rubini");

MODULE_LICENSE("Dual BSD/GPL");

struct scullo_dev *scullo_devices; /* allocated in scullo_init */

int scullo_trim(struct scullo_dev *dev);

void scullo_cleanup(void);

/* declare one cache pointer: use it for all devices */

struct kmem_cache *scullo_cache;

#ifdef SCULLO_USE_PROC /* don't waste space if unused */

/*

* The proc filesystem: function to read and entry

*/

/* FIXME: Do we need this here?? It be ugly */

int scullo_read_procmem(struct seq_file *m, void *v)

{

int i, j, quantum, order, qset;

int limit = m->size - 80; /* Don't print more than this */

struct scullo_dev *d;

for(i = 0; i < scullo_devs; i++) {

d = &scullo_devices[i];

if (mutex_lock_interruptible (&d->mutex))

return -ERESTARTSYS;

qset = d->qset; /* retrieve the features of each device */

if (scullo_type == scullv || scullo_type == scullp) {

order = d->order;

seq_printf(m,"\nDevice %i: qset %i, order %i, sz %li\n",

i, qset, order, (long)(d->size));

} else if (scullo_type == scullc) {

quantum = d->quantum;

seq_printf(m,"\nDevice %i: qset %i, quantum %i, sz %li\n",

i, qset, quantum, (long)(d->size));

}

for (; d; d = d->next) { /* scan the list */

seq_printf(m," item at %p, qset at %p\n",d,d->data);

if (m->count > limit)

goto out;

if (d->data && !d->next) /* dump only the last item - save space */

for (j = 0; j < qset; j++) {

if (d->data[j])

seq_printf(m," % 4i:%8p\n",j,d->data[j]);

if (m->count > limit)

goto out;

}

}

out:

mutex_unlock (&scullo_devices[i].mutex);

if (m->count > limit)

break;

}

return 0;

}

static int scullo_proc_open(struct inode *inode, struct file *file)

{

return single_open(file, scullo_read_procmem, NULL);

}

static struct file_operations scullo_proc_ops = {

.owner = THIS_MODULE,

.open = scullo_proc_open,

.read = seq_read,

.llseek = seq_lseek,

.release = single_release

};

#endif /* SCULLO_USE_PROC */

/*

* Open and close

*/

int scullo_open (struct inode *inode, struct file *filp)

{

struct scullo_dev *dev; /* device information */

/* Find the device */

dev = container_of(inode->i_cdev, struct scullo_dev, cdev);

/* now trim to 0 the length of the device if open was write-only */

if ( (filp->f_flags & O_ACCMODE) == O_WRONLY) {

if (mutex_lock_interruptible(&dev->mutex))

return -ERESTARTSYS;

scullo_trim(dev); /* ignore errors */

mutex_unlock(&dev->mutex);

}

/* and use filp->private_data to point to the device data */

filp->private_data = dev;

return 0; /* success */

}

int scullo_release (struct inode *inode, struct file *filp)

{

return 0;

}

/*

* Follow the list

*/

struct scullo_dev *scullo_follow(struct scullo_dev *dev, int n)

{

while (n--) {

if (!dev->next) {

dev->next = kmalloc(sizeof(struct scullo_dev), GFP_KERNEL);

memset(dev->next, 0, sizeof(struct scullo_dev));

}

dev = dev->next;

continue;

}

return dev;

}

/*

* Data management: read and write

*/

ssize_t scullo_read (struct file *filp, char __user *buf, size_t count,

loff_t *f_pos)

{

struct scullo_dev *dev = filp->private_data; /* the first listitem */

struct scullo_dev *dptr;

int quantum = 0;

if (scullo_type == scullv || scullo_type == scullp) {

quantum = PAGE_SIZE << dev->order;

} else if (scullo_type == scullc) {

quantum = dev->quantum;

}

int qset = dev->qset;

int itemsize = quantum * qset; /* how many bytes in the listitem */

int item, s_pos, q_pos, rest;

ssize_t retval = 0;

if (mutex_lock_interruptible(&dev->mutex))

return -ERESTARTSYS;

if (*f_pos > dev->size)

goto nothing;

if (*f_pos + count > dev->size)

count = dev->size - *f_pos;

/* find listitem, qset index, and offset in the quantum */

item = ((long) *f_pos) / itemsize;

rest = ((long) *f_pos) % itemsize;

s_pos = rest / quantum; q_pos = rest % quantum;

/* follow the list up to the right position (defined elsewhere) */

dptr = scullo_follow(dev, item);

if (!dptr->data)

goto nothing; /* don't fill holes */

if (!dptr->data[s_pos])

goto nothing;

if (count > quantum - q_pos)

count = quantum - q_pos; /* read only up to the end of this quantum */

if (copy_to_user (buf, dptr->data[s_pos]+q_pos, count)) {

retval = -EFAULT;

goto nothing;

}

mutex_unlock(&dev->mutex);

*f_pos += count;

return count;

nothing:

mutex_unlock(&dev->mutex);

return retval;

}

ssize_t scullo_write (struct file *filp, const char __user *buf, size_t count,

loff_t *f_pos)

{

struct scullo_dev *dev = filp->private_data;

struct scullo_dev *dptr;

int quantum = 0;

if (scullo_type == scullv || scullo_type == scullp) {

quantum = PAGE_SIZE << dev->order;

} else if (scullo_type == scullc) {

quantum = dev->quantum;

}

int qset = dev->qset;

int itemsize = quantum * qset;

int item, s_pos, q_pos, rest;

ssize_t retval = -ENOMEM; /* our most likely error */

if (mutex_lock_interruptible(&dev->mutex))

return -ERESTARTSYS;

/* find listitem, qset index and offset in the quantum */

item = ((long) *f_pos) / itemsize;

rest = ((long) *f_pos) % itemsize;

s_pos = rest / quantum; q_pos = rest % quantum;

/* follow the list up to the right position */

dptr = scullo_follow(dev, item);

if (!dptr->data) {

dptr->data = kmalloc(qset * sizeof(void *), GFP_KERNEL);

if (!dptr->data)

goto nomem;

memset(dptr->data, 0, qset * sizeof(char *));

}

/* Allocate a quantum using virtual addresses */

if (!dptr->data[s_pos]) {

if (scullo_type == scullv)

dptr->data[s_pos] = (void *)vmalloc(PAGE_SIZE << dptr->order);

else if (scullo_type == scullp)

dptr->data[s_pos] = (void *)__get_free_pages(GFP_KERNEL, dptr->order);

else if (scullo_type == scullc)

dptr->data[s_pos] = (void *)kmem_cache_alloc(scullo_cache, GFP_KERNEL);

if (!dptr->data[s_pos])

goto nomem;

if (scullo_type == scullv || scullo_type == scullp)

memset(dptr->data[s_pos], 0, PAGE_SIZE << dptr->order);

else if (scullo_type == scullc) {

printk(KERN_WARNING "Test1.1 %d %p\n", scullo_quantum, dptr->data[s_pos]);

memset(dptr->data[s_pos], 0, scullo_quantum);

}

}

if (count > quantum - q_pos)

count = quantum - q_pos; /* write only up to the end of this quantum */

if (copy_from_user (dptr->data[s_pos]+q_pos, buf, count)) {

retval = -EFAULT;

goto nomem;

}

*f_pos += count;

printk(KERN_WARNING "Test4\n");

/* update the size */

if (dev->size < *f_pos)

dev->size = *f_pos;

mutex_unlock(&dev->mutex);

return count;

nomem:

mutex_unlock(&dev->mutex);

return retval;

}

/*

* The ioctl() implementation

*/

long scullo_ioctl (struct file *filp, unsigned int cmd, unsigned long arg)

{

int err = 0, ret = 0, tmp;

/* don't even decode wrong cmds: better returning ENOTTY than EFAULT */

if (_IOC_TYPE(cmd) != SCULLO_IOC_MAGIC) return -ENOTTY;

if (_IOC_NR(cmd) > SCULLO_IOC_MAXNR) return -ENOTTY;

/*

* the type is a bitmask, and VERIFY_WRITE catches R/W

* transfers. Note that the type is user-oriented, while

* verify_area is kernel-oriented, so the concept of "read" and

* "write" is reversed

*/

if (_IOC_DIR(cmd) & _IOC_READ)

err = !access_ok_wrapper(VERIFY_WRITE, (void __user *)arg, _IOC_SIZE(cmd));

else if (_IOC_DIR(cmd) & _IOC_WRITE)

err = !access_ok_wrapper(VERIFY_READ, (void __user *)arg, _IOC_SIZE(cmd));

if (err)

return -EFAULT;

switch(cmd) {

case SCULLO_IOCRESET:

scullo_qset = SCULLO_QSET;

if (scullo_type == scullv)

scullo_order = scullv_order;

else if (scullo_type == scullp)

scullo_order = scullp_order;

else if (scullo_type == scullc)

scullo_quantum = SCULLO_QUANTUM;

break;

case SCULLO_IOCSORDER: /* Set: arg points to the value */

if (scullo_type == scullv || scullo_type == scullp)

ret = __get_user(scullo_order, (int __user *) arg);

else if (scullo_type == scullc)

ret = __get_user(scullo_quantum, (int __user *) arg);

break;

case SCULLO_IOCTORDER: /* Tell: arg is the value */

if (scullo_type == scullv || scullo_type == scullp)

scullo_order = arg;

else if (scullo_type == scullc)

scullo_quantum = arg;

break;

case SCULLO_IOCGORDER: /* Get: arg is pointer to result */

if (scullo_type == scullv || scullo_type == scullp)

ret = __put_user(scullo_order, (int __user *) arg);

else if (scullo_type == scullc)

ret = __put_user(scullo_quantum, (int __user *) arg);

break;

case SCULLO_IOCQORDER: /* Query: return it (it's positive) */

if (scullo_type == scullv || scullo_type == scullp)

return scullo_order;

else

return scullo_quantum;

case SCULLO_IOCXORDER: /* eXchange: use arg as pointer */

if (scullo_type == scullv || scullo_type == scullp) {

tmp = scullo_order;

ret = __get_user(scullo_order, (int __user *) arg);

if (ret == 0)

ret = __put_user(tmp, (int __user *) arg);

} else if (scullo_type == scullc) {

tmp = scullo_quantum;

ret = __get_user(scullo_quantum, (int __user *) arg);

if (ret == 0)

ret = __put_user(tmp, (int __user *) arg);

}

break;

case SCULLO_IOCHORDER: /* sHift: like Tell + Query */

if (scullo_type == scullv || scullo_type == scullp) {

tmp = scullo_order;

scullo_order = arg;

} else if (scullo_type == scullc) {

tmp = scullo_quantum;

scullo_quantum = arg;

}

return tmp;

case SCULLO_IOCSQSET:

ret = __get_user(scullo_qset, (int __user *) arg);

break;

case SCULLO_IOCTQSET:

scullo_qset = arg;

break;

case SCULLO_IOCGQSET:

ret = __put_user(scullo_qset, (int __user *)arg);

break;

case SCULLO_IOCQQSET:

return scullo_qset;

case SCULLO_IOCXQSET:

tmp = scullo_qset;

ret = __get_user(scullo_qset, (int __user *)arg);

if (ret == 0)

ret = __put_user(tmp, (int __user *)arg);

break;

case SCULLO_IOCHQSET:

tmp = scullo_qset;

scullo_qset = arg;

return tmp;

default: /* redundant, as cmd was checked against MAXNR */

return -ENOTTY;

}

return ret;

}

/*

* The "extended" operations

*/

loff_t scullo_llseek (struct file *filp, loff_t off, int whence)

{

struct scullo_dev *dev = filp->private_data;

long newpos;

switch(whence) {

case 0: /* SEEK_SET */

newpos = off;

break;

case 1: /* SEEK_CUR */

newpos = filp->f_pos + off;

break;

case 2: /* SEEK_END */

newpos = dev->size + off;

break;

default: /* can't happen */

return -EINVAL;

}

if (newpos<0) return -EINVAL;

filp->f_pos = newpos;

return newpos;

}

/*

* Mmap *is* available, but confined in a different file

*/

extern int scullo_mmap(struct file *filp, struct vm_area_struct *vma);

/*

* The fops

*/

struct file_operations scullo_fops = {

.owner = THIS_MODULE,

.llseek = scullo_llseek,

.read = scullo_read,

.write = scullo_write,

.unlocked_ioctl = scullo_ioctl,

.mmap = scullo_mmap,

.open = scullo_open,

.release = scullo_release,

.read_iter = scull_read_iter,

.write_iter = scull_write_iter,

};

int scullo_trim(struct scullo_dev *dev)

{

struct scullo_dev *next, *dptr;

int qset = dev->qset; /* "dev" is not-null */

int i;

if (dev->vmas) /* don't trim: there are active mappings */

return -EBUSY;

for (dptr = dev; dptr; dptr = next) { /* all the list items */

if (dptr->data) {

/* Release the quantum-set */

for (i = 0; i < qset; i++)

if (dptr->data[i]) {

if (scullo_type == scullv)

vfree(dptr->data[i]);

else if (scullo_type == scullp)

free_pages((unsigned long)dptr->data[i], dptr->order);

else if (scullo_type == scullc)

kmem_cache_free(scullo_cache, dptr->data[i]);

}

kfree(dptr->data);

dptr->data=NULL;

}

next=dptr->next;

if (dptr != dev) kfree(dptr); /* all of them but the first */

}

dev->size = 0;

dev->qset = scullo_qset;

if (scullo_type == scullv || scullo_type == scullp)

dev->order = scullo_order;

else if (scullo_type == scullc)

dev->quantum = scullo_quantum;

dev->next = NULL;

return 0;

}

static void scullo_setup_cdev(struct scullo_dev *dev, int index)

{

int err, devno = MKDEV(scullo_major, index);

cdev_init(&dev->cdev, &scullo_fops);

dev->cdev.owner = THIS_MODULE;

if (scullo_type == scullp) {

dev->cdev.ops = &scullo_fops;

}

err = cdev_add (&dev->cdev, devno, 1);

/* Fail gracefully if need be */

if (err)

printk(KERN_NOTICE "Error %d adding scull%d", err, index);

}

/*

* Finally, the module stuff

*/

int scullo_init(void)

{

int result, i;

dev_t dev = MKDEV(scullo_major, 0);

/*

* Register your major, and accept a dynamic number.

*/

if (scullo_major)

result = register_chrdev_region(dev, scullo_devs, "scullo");

else {

result = alloc_chrdev_region(&dev, 0, scullo_devs, "scullo");

scullo_major = MAJOR(dev);

}

if (result < 0)

return result;

/*

* allocate the devices -- we can't have them static, as the number

* can be specified at load time

*/

scullo_devices = kmalloc(scullo_devs*sizeof (struct scullo_dev), GFP_KERNEL);

if (!scullo_devices) {

result = -ENOMEM;

goto fail_malloc;

}

memset(scullo_devices, 0, scullo_devs*sizeof (struct scullo_dev));

for (i = 0; i < scullo_devs; i++) {

if (scullo_type == scullv || scullo_type == scullp) {

scullo_devices[i].order = scullo_order;

} else if (scullo_type == scullc) {

scullo_devices[i].quantum = scullo_quantum;

}

scullo_devices[i].qset = scullo_qset;

mutex_init(&scullo_devices[i].mutex);

scullo_setup_cdev(scullo_devices + i, i);

}

if (scullo_type == scullc) {

// scullo_cache = kmem_cache_create("scullo", scullo_quantum, 0, SLAB_HWCACHE_ALIGN, NULL);

scullo_cache = kmem_cache_create_usercopy("scullo", scullo_quantum, 0, SLAB_HWCACHE_ALIGN, 0, scullo_quantum, NULL); /* no ctor/dtor */

if (!scullo_cache) {

scullo_cleanup();

return -ENOMEM;

}

}

#ifdef SCULLO_USE_PROC /* only when available */

proc_create("scullomem", 0, NULL, proc_ops_wrapper(&scullo_proc_ops, scullo_pops));

#endif

return 0; /* succeed */

fail_malloc:

unregister_chrdev_region(dev, scullo_devs);

return result;

}

void scullo_cleanup(void)

{

int i;

#ifdef SCULLO_USE_PROC

remove_proc_entry("scullomem", NULL);

#endif

for (i = 0; i < scullo_devs; i++) {

cdev_del(&scullo_devices[i].cdev);

scullo_trim(scullo_devices + i);

}

kfree(scullo_devices);

if (scullo_type == scullc && scullo_cache)

kmem_cache_destroy(scullo_cache);

unregister_chrdev_region(MKDEV (scullo_major, 0), scullo_devs);

}

module_init(scullo_init);

module_exit(scullo_cleanup);

mmap.c

/* -*- C -*-

* mmap.c -- memory mapping for the scullo char module

*

* Copyright (C) 2001 Alessandro Rubini and Jonathan Corbet

* Copyright (C) 2001 O'Reilly & Associates

*

* The source code in this file can be freely used, adapted,

* and redistributed in source or binary form, so long as an

* acknowledgment appears in derived source files. The citation

* should list that the code comes from the book "Linux Device

* Drivers" by Alessandro Rubini and Jonathan Corbet, published

* by O'Reilly & Associates. No warranty is attached;

* we cannot take responsibility for errors or fitness for use.

*

* $Id: _mmap.c.in,v 1.13 2004/10/18 18:07:36 corbet Exp $

*/

#include <linux/module.h>

#include <linux/mm.h> /* everything */

#include <linux/errno.h> /* error codes */

#include <asm/pgtable.h>

#include <linux/version.h>

#include <linux/fs.h>

#include "scullo.h" /* local definitions */

/*

* open and close: just keep track of how many times the device is

* mapped, to avoid releasing it.

*/

void scullo_vma_open(struct vm_area_struct *vma)

{

struct scullo_dev *dev = vma->vm_private_data;

dev->vmas++;

}

void scullo_vma_close(struct vm_area_struct *vma)

{

struct scullo_dev *dev = vma->vm_private_data;

dev->vmas--;

}

/*

* The nopage method: the core of the file. It retrieves the

* page required from the scullo device and returns it to the

* user. The count for the page must be incremented, because

* it is automatically decremented at page unmap.

*

* For this reason, "order" must be zero. Otherwise, only the first

* page has its count incremented, and the allocating module must

* release it as a whole block. Therefore, it isn't possible to map

* pages from a multipage block: when they are unmapped, their count

* is individually decreased, and would drop to 0.

*/

#if LINUX_VERSION_CODE < KERNEL_VERSION(4,17,0)

typedef int vm_fault_t;

#endif

static vm_fault_t scullo_vma_nopage(struct vm_fault *vmf)

{

unsigned long offset;

struct vm_area_struct *vma = vmf->vma;

struct scullo_dev *ptr, *dev = vma->vm_private_data;

struct page *page = NULL;

void *pageptr = NULL; /* default to "missing" */

vm_fault_t retval = VM_FAULT_SIGBUS; /* holes and out-of-range accesses get SIGBUS */

mutex_lock(&dev->mutex);

offset = (unsigned long)(vmf->address - vma->vm_start) + (vma->vm_pgoff << PAGE_SHIFT);

if (offset >= dev->size) goto out; /* out of range */

/*

* Now retrieve the scullo device from the list,then the page.

* If the device has holes, the process receives a SIGBUS when

* accessing the hole.

*/

offset >>= PAGE_SHIFT; /* offset is a number of pages */

for (ptr = dev; ptr && offset >= dev->qset;) {

ptr = ptr->next;

offset -= dev->qset;

}

if (ptr && ptr->data) pageptr = ptr->data[offset];

if (!pageptr) goto out; /* hole or end-of-file */

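/*

* After scullo lookup, "pageptr" is the kernel virtual address of the

* page needed by the current process. Turn it into a struct page:

* vmalloc_to_page() for vmalloc addresses (scullv), virt_to_page()

* for directly mapped addresses (scullp).

*/

if (scullo_type == scullv) {

page = vmalloc_to_page(pageptr);

} else if (scullo_type == scullp) {

page = virt_to_page(pageptr);

}

if (!page) goto out; /* scullc (slab memory) or unknown type: nothing to map */

/* got it, now increment the count */

get_page(page);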

vmf->page = page;

retval = 0;

out:

mutex_unlock(&dev->mutex);

return retval;

}

struct vm_operations_struct scullo_vm_ops = {

.open = scullo_vma_open,

.close = scullo_vma_close,

.fault = scullo_vma_nopage,

};

// struct vm_operations_struct scullc_vm_ops = {

// .open = scullo_vma_open,

// .close = scullo_vma_close,

// .nopage = scullc_vma_nopage,

// };

int scullo_mmap(struct file *filp, struct vm_area_struct *vma)

{

struct inode *inode = filp->f_path.dentry->d_inode;

/* refuse to map if order is not 0 */

if (scullo_devices[iminor(inode)].order)

return -ENODEV;

vma->vm_ops = &scullo_vm_ops;

vma->vm_private_data = filp->private_data;

scullo_vma_open(vma);

return 0;

}

scullo.h

/* -*- C -*-

* scullo.h -- definitions for the scullo char module

*

* Copyright (C) 2001 Alessandro Rubini and Jonathan Corbet

* Copyright (C) 2001 O'Reilly & Associates

*

* The source code in this file can be freely used, adapted,

* and redistributed in source or binary form, so long as an

* acknowledgment appears in derived source files. The citation

* should list that the code comes from the book "Linux Device

* Drivers" by Alessandro Rubini and Jonathan Corbet, published

* by O'Reilly & Associates. No warranty is attached;

* we cannot take responsibility for errors or fitness for use.

*/

#include <linux/ioctl.h>

#include <linux/cdev.h>

#include <linux/semaphore.h>

#include <linux/mutex.h> /* struct mutex, used in struct scullo_dev */

/*

* Macros to help debugging

*/

#undef PDEBUG /* undef it, just in case */

#ifdef SCULLO_DEBUG

# ifdef __KERNEL__

/* This one if debugging is on, and kernel space */

# define PDEBUG(fmt, args...) printk( KERN_DEBUG "scullo: " fmt, ## args)

# else

/* This one for user space */

# define PDEBUG(fmt, args...) fprintf(stderr, fmt, ## args)

# endif

#else

# define PDEBUG(fmt, args...) /* not debugging: nothing */

#endif

#undef PDEBUGG

#define PDEBUGG(fmt, args...) /* nothing: it's a placeholder */

#define SCULLO_MAJOR 0 /* dynamic major by default */

#define SCULLO_DEVS 4 /* scullo0 through scullo3 */

/*

* The bare device is a variable-length region of memory.

* Use a linked list of indirect blocks.

*

* "scullo_dev->data" points to an array of pointers, each

* pointer refers to a memory page.

*

* The array (quantum-set) is SCULLO_QSET long.

*/

// #define SCULLO_ORDER 4 /* 16 pages at a time */

#define SCULLO_QUANTUM 4000 /* use a quantum size like scull */

#define SCULLO_QSET 500

struct scullo_dev {

void **data;

struct scullo_dev *next; /* next listitem */

int vmas; /* active mappings */

int order; /* the current allocation order */

int quantum; /* the current allocation size */

int qset; /* the current array size */

size_t size; /* 32-bit will suffice */

struct mutex mutex; /* Mutual exclusion */

struct cdev cdev;

};

extern struct scullo_dev *scullo_devices;

extern struct file_operations scullo_fops;

/*

* The different configurable parameters

*/

extern int scullo_major; /* main.c */

extern int scullo_devs;

extern int scullo_order;

extern int scullo_qset;

enum scull_type{

scullc=0,

scullp,

scullv,

};

extern int scullo_type;

/*

* Prototypes for shared functions

*/

int scullo_trim(struct scullo_dev *dev);

struct scullo_dev *scullo_follow(struct scullo_dev *dev, int n);

#ifdef SCULLO_DEBUG

# define SCULLO_USE_PROC

#endif

/*

* Ioctl definitions

*/

/* Use 'K' as magic number */

#define SCULLO_IOC_MAGIC 'K'

#define SCULLO_IOCRESET _IO(SCULLO_IOC_MAGIC, 0)

/*

* S means "Set" through a ptr,

* T means "Tell" directly

* G means "Get" (to a pointed var)

* Q means "Query", response is on the return value

* X means "eXchange": G and S atomically

* H means "sHift": T and Q atomically

*/

// // for scullc

// #define SCULLO_IOCSQUANTUM _IOW(SCULLO_IOC_MAGIC, 1, int)

// #define SCULLO_IOCTQUANTUM _IO(SCULLO_IOC_MAGIC, 2)

// #define SCULLO_IOCGQUANTUM _IOR(SCULLO_IOC_MAGIC, 3, int)

// #define SCULLO_IOCQQUANTUM _IO(SCULLO_IOC_MAGIC, 4)

// #define SCULLO_IOCXQUANTUM _IOWR(SCULLO_IOC_MAGIC, 5, int)

// #define SCULLO_IOCHQUANTUM _IO(SCULLO_IOC_MAGIC, 6)

// for scullp && scullv

#define SCULLO_IOCSORDER _IOW(SCULLO_IOC_MAGIC, 1, int)

#define SCULLO_IOCTORDER _IO(SCULLO_IOC_MAGIC, 2)

#define SCULLO_IOCGORDER _IOR(SCULLO_IOC_MAGIC, 3, int)

#define SCULLO_IOCQORDER _IO(SCULLO_IOC_MAGIC, 4)

#define SCULLO_IOCXORDER _IOWR(SCULLO_IOC_MAGIC, 5, int)

#define SCULLO_IOCHORDER _IO(SCULLO_IOC_MAGIC, 6)

#define SCULLO_IOCSQSET _IOW(SCULLO_IOC_MAGIC, 7, int)

#define SCULLO_IOCTQSET _IO(SCULLO_IOC_MAGIC, 8)

#define SCULLO_IOCGQSET _IOR(SCULLO_IOC_MAGIC, 9, int)

#define SCULLO_IOCQQSET _IO(SCULLO_IOC_MAGIC, 10)

#define SCULLO_IOCXQSET _IOWR(SCULLO_IOC_MAGIC,11, int)

#define SCULLO_IOCHQSET _IO(SCULLO_IOC_MAGIC, 12)

#define SCULLO_IOC_MAXNR 12

Makefile

# Comment/uncomment the following line to enable/disable debugging

#DEBUG = y

ifeq ($(DEBUG),y)

DEBFLAGS = -O -g -DSCULLO_DEBUG # "-O" is needed to expand inlines

else

DEBFLAGS = -O2

endif

LDDINC=$(PWD)/../include

EXTRA_CFLAGS += $(DEBFLAGS) -I$(LDDINC)

TARGET = scullo

ifneq ($(KERNELRELEASE),)

scullo-objs := main.o mmap.o scull-shared/scull-async.o

obj-m := scullo.o

else

KERNELDIR ?= /lib/modules/$(shell uname -r)/build

PWD := $(shell pwd)

modules:

$(MAKE) -C $(KERNELDIR) M=$(PWD) modules

endif

install:

install -d $(INSTALLDIR)

install -c $(TARGET).ko $(INSTALLDIR)

clean:

rm -rf *.o *~ core .depend .*.cmd *.ko *.mod.c .tmp_versions *.mod modules.order *.symvers scull-shared/scull-async.o

depend .depend dep:

$(CC) $(EXTRA_CFLAGS) -M *.c > .depend

ifeq (.depend,$(wildcard .depend))

include .depend

endif

scullo_load/scullo_unload

#!/bin/sh

module="scullo"

device="scullo"

mode="664"

# Group: since distributions do it differently, look for staff or fall back to wheel

if grep '^staff:' /etc/group > /dev/null; then

group="staff"

else

group="wheel"

fi

# remove stale nodes

rm -f /dev/${device}?

# invoke insmod with all arguments we got

# and use a pathname, as newer modutils don't look in . by default

insmod ./$module.ko $* || exit 1

major=`cat /proc/devices | awk "\\$2==\"$module\" {print \\$1}"`

mknod /dev/${device}0 c $major 0

mknod /dev/${device}1 c $major 1

mknod /dev/${device}2 c $major 2

mknod /dev/${device}3 c $major 3

ln -sf ${device}0 /dev/${device}

# give appropriate group/permissions

chgrp $group /dev/${device}[0-3]

chmod $mode /dev/${device}[0-3]

#!/bin/sh

module="scullo"

device="scullo"

# invoke rmmod with all arguments we got

rmmod $module $* || exit 1

# remove nodes

rm -f /dev/${device}[0-3] /dev/${device}

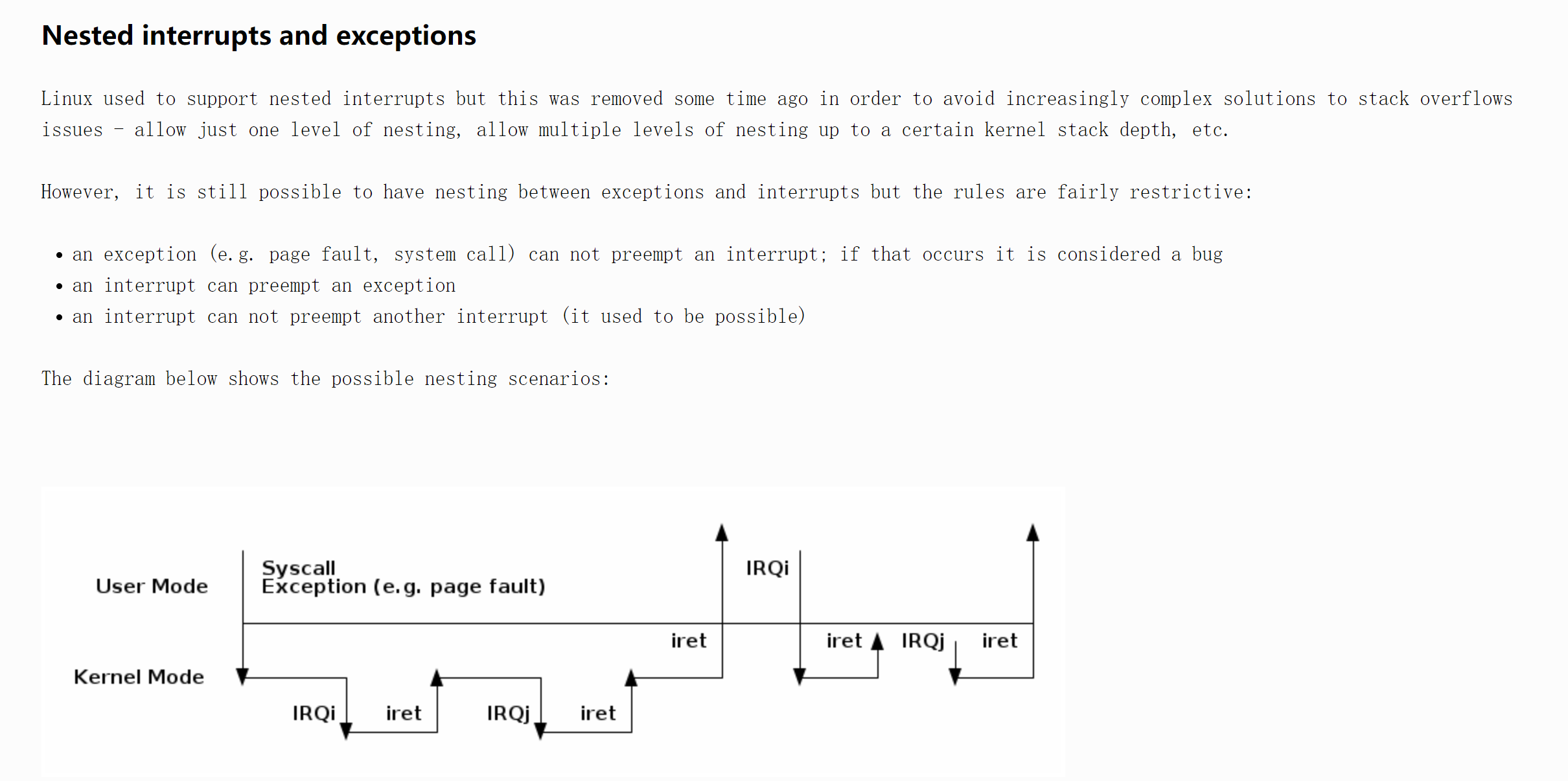

exit 0

Supplementary notes on interrupts

Interrupt nesting used to be supported (

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit R0gerThat when reposting!
