MIT 6.S191

SOME NOTES FROM THE LECTURE

Deep Generative Modeling

Generative Modeling Goal: Take as input training samples from some distribution and learn a model that represents that distribution
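
As a minimal sketch of this goal, assume the data really is Gaussian: the "model" is then just an estimated mean and standard deviation, and generating means sampling from them. The training data, `fit_gaussian`, and `sample_from_model` are all made up for illustration; deep generative models replace the Gaussian with a neural network.

```python
import random
import statistics

def fit_gaussian(samples):
    """Learn the model: estimate the mean and standard deviation."""
    return statistics.fmean(samples), statistics.stdev(samples)

def sample_from_model(mean, std, n, rng):
    """Generate n new samples from the learned distribution."""
    return [rng.gauss(mean, std) for _ in range(n)]

rng = random.Random(0)
# Hypothetical training samples: 1,000 draws from a Gaussian (mean 5, std 2).
training_samples = [rng.gauss(5.0, 2.0) for _ in range(1000)]

mean, std = fit_gaussian(training_samples)
new_samples = sample_from_model(mean, std, 5, rng)
```

The learned `mean` and `std` should land close to the values used to create the training data, and `new_samples` are fresh points that were never in the training set.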

Latent variable: A variable that is not directly observable but captures the true underlying features or explanatory factors of the data

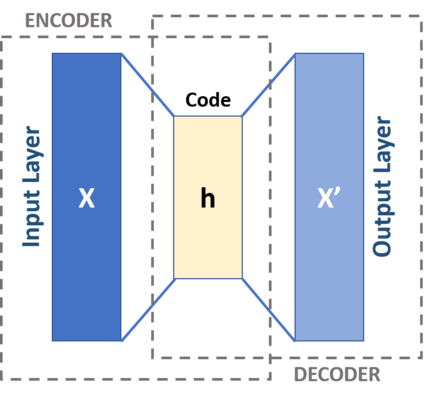

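Autoencoder: An autoencoder is a type of artificial neural network that learns efficient codings of unlabeled data (unsupervised learning) by compressing the input into a lower-dimensional latent code and then reconstructing the input from that code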
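
A minimal sketch of the idea, assuming a purely linear autoencoder that squeezes 2-D points into one latent number and back. The data, the starting weights, and the learning rate are arbitrary choices for illustration; real autoencoders use nonlinear layers and a deep learning library.

```python
def encode(w, x):
    """Compress a 2-D point into a single latent number."""
    return w[0] * x[0] + w[1] * x[1]

def decode(v, z):
    """Reconstruct a 2-D point from the latent number."""
    return [v[0] * z, v[1] * z]

def reconstruction_loss(w, v, data):
    """Mean squared reconstruction error over the dataset."""
    total = 0.0
    for x in data:
        x_hat = decode(v, encode(w, x))
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(data)

def train(data, lr=0.02, epochs=200):
    w = [0.1, 0.1]  # encoder weights (arbitrary small start)
    v = [0.1, 0.1]  # decoder weights (arbitrary small start)
    for _ in range(epochs):
        for x in data:
            z = encode(w, x)
            x_hat = decode(v, z)
            err = [x_hat[0] - x[0], x_hat[1] - x[1]]
            # Backpropagate the squared error: decoder first, then encoder.
            dz = 2 * (err[0] * v[0] + err[1] * v[1])
            grad_v = [2 * err[0] * z, 2 * err[1] * z]
            grad_w = [dz * x[0], dz * x[1]]
            v = [v[i] - lr * grad_v[i] for i in range(2)]
            w = [w[i] - lr * grad_w[i] for i in range(2)]
    return w, v

# Hypothetical unlabeled data: points on the line x2 = 2 * x1.
data = [[t, 2 * t] for t in (-1 + 2 * i / 49 for i in range(50))]
loss_before = reconstruction_loss([0.1, 0.1], [0.1, 0.1], data)
w, v = train(data)
loss_after = reconstruction_loss(w, v, data)
```

No labels are used anywhere: the only training signal is how well the input is reconstructed. Because the data lies on a line, one latent number is enough to describe each point.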

Variational Autoencoders (VAEs):

Generative Adversarial Networks (GANs): GANs build a generative model by having two neural networks compete with each other: a generator that produces fake samples and a discriminator that tries to tell them apart from real data.
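
The competition can be sketched as a single training step on 1-D data. Everything here is an assumption for illustration: the generator is `a * z + b`, the discriminator is a logistic unit, the gradients are written out by hand, and the generator uses the common non-saturating loss.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def discriminator(params, x):
    """Probability that x is a real sample."""
    w, c = params
    return sigmoid(w * x + c)

def generator(params, z):
    """Map noise z to a fake sample."""
    a, b = params
    return a * z + b

def gan_step(g_params, d_params, real_batch, noise_batch, lr=0.05):
    """One discriminator update followed by one generator update."""
    a, b = g_params
    w, c = d_params
    n = len(real_batch)
    fake_batch = [generator(g_params, z) for z in noise_batch]

    # Discriminator: minimise -[log D(real) + log(1 - D(fake))].
    grad_w = grad_c = 0.0
    for x_real, x_fake in zip(real_batch, fake_batch):
        d_real = discriminator(d_params, x_real)
        d_fake = discriminator(d_params, x_fake)
        grad_w += ((d_real - 1.0) * x_real + d_fake * x_fake) / n
        grad_c += ((d_real - 1.0) + d_fake) / n
    w, c = w - lr * grad_w, c - lr * grad_c

    # Generator: minimise -log D(fake), using the updated discriminator.
    grad_a = grad_b = 0.0
    for z in noise_batch:
        d_fake = discriminator((w, c), generator(g_params, z))
        grad_a += (d_fake - 1.0) * w * z / n
        grad_b += (d_fake - 1.0) * w / n
    a, b = a - lr * grad_a, b - lr * grad_b
    return (a, b), (w, c)

rng = random.Random(0)
g_params, d_params = (1.0, 0.0), (0.1, 0.0)             # arbitrary start
real_batch = [rng.gauss(4.0, 1.0) for _ in range(64)]   # hypothetical real data
noise_batch = [rng.gauss(0.0, 1.0) for _ in range(64)]
g_after, d_after = gan_step(g_params, d_params, real_batch, noise_batch)
```

In this first step the discriminator learns that larger values look more real, and the generator responds by shifting its offset `b` upward toward the real data. Full training repeats the step on fresh batches; a toy setup like this one can oscillate rather than settle.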

Dropout Layer:

Double Descent:

Lipschitz:

Neural Tangent Kernels (Jacot et al. 2018): The neural tangent kernel is a kernel that describes how a neural network evolves during training by gradient descent.
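
For a scalar-output network f, the kernel between two inputs is the inner product of the parameter gradients of f at those inputs. A minimal sketch of this empirical kernel at initialization, assuming a made-up one-hidden-layer tanh network with scalar input and hand-written gradients:

```python
import math
import random

def init_params(width, rng):
    """Random weights for f(x) = (1/sqrt(m)) * sum_k a_k * tanh(w_k * x)."""
    a = [rng.gauss(0.0, 1.0) for _ in range(width)]
    w = [rng.gauss(0.0, 1.0) for _ in range(width)]
    return a, w

def param_gradient(params, x):
    """Gradient of the scalar output f(x) with respect to all parameters."""
    a, w = params
    scale = 1.0 / math.sqrt(len(a))
    hidden = [math.tanh(w_k * x) for w_k in w]
    grad_a = [scale * h for h in hidden]
    grad_w = [scale * a_k * (1.0 - h * h) * x for a_k, h in zip(a, hidden)]
    return grad_a + grad_w  # concatenated into one gradient vector

def empirical_ntk(params, x1, x2):
    """Inner product of the parameter gradients at x1 and x2."""
    g1 = param_gradient(params, x1)
    g2 = param_gradient(params, x2)
    return sum(u * v for u, v in zip(g1, g2))

params = init_params(width=512, rng=random.Random(0))
inputs = [-1.0, 0.0, 1.0]
kernel = [[empirical_ntk(params, p, q) for q in inputs] for p in inputs]
```

`kernel` is a symmetric matrix with one row and column per input. A large entry means that a gradient step fitting one input also moves the network's output at the other.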

Effective dimension:

Liquid Neural Network (LNN): A Liquid Neural Network is a continuous-time Recurrent Neural Network (RNN). It processes data sequentially, keeps a memory of past inputs, adjusts its behavior based on new inputs, and can handle variable-length inputs.
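
A minimal sketch of a continuous-time recurrent cell, assuming a single neuron whose state follows an ODE integrated with explicit Euler steps. The input-dependent gate `f` is loosely modeled on the liquid time-constant idea; the equation, the parameter names, and their values are illustrative assumptions, not the formulation from the lecture.

```python
import math

def ltc_step(x, u, dt, tau=1.0, w_in=1.0, w_rec=0.5, bias=0.0, target=1.0):
    """One explicit Euler step of dx/dt = -(1/tau + f) * x + f * target.

    f is a sigmoid gate on the input u and the state x, so the effective
    time constant of the neuron changes with the input.
    """
    f = 1.0 / (1.0 + math.exp(-(w_in * u + w_rec * x + bias)))
    dx = -(1.0 / tau + f) * x + f * target
    return x + dt * dx

def run(sequence, dt=0.1, x0=0.0):
    """Feed a sequence of any length through the cell, one input per step."""
    x = x0
    states = []
    for u in sequence:
        x = ltc_step(x, u, dt)  # the state carries memory of past inputs
        states.append(x)
    return states

short = run([1.0, 1.0, 0.0])
long = run([1.0] * 50 + [0.0] * 50)
```

The same cell handles both the 3-step and the 100-step sequence. The state after an input depends on what came before it, which is the memory property described above.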